Test prompts on datasets

Experiment with your datasets in the prompt playground

When you modify a prompt in the playground, you can test the new version across a dataset of examples. This confirms that it improves performance on challenging examples without regressing on core business use cases.

Step 1: Set a Dataset

Follow the Create a dataset guide to upload your dataset to Arize AX.

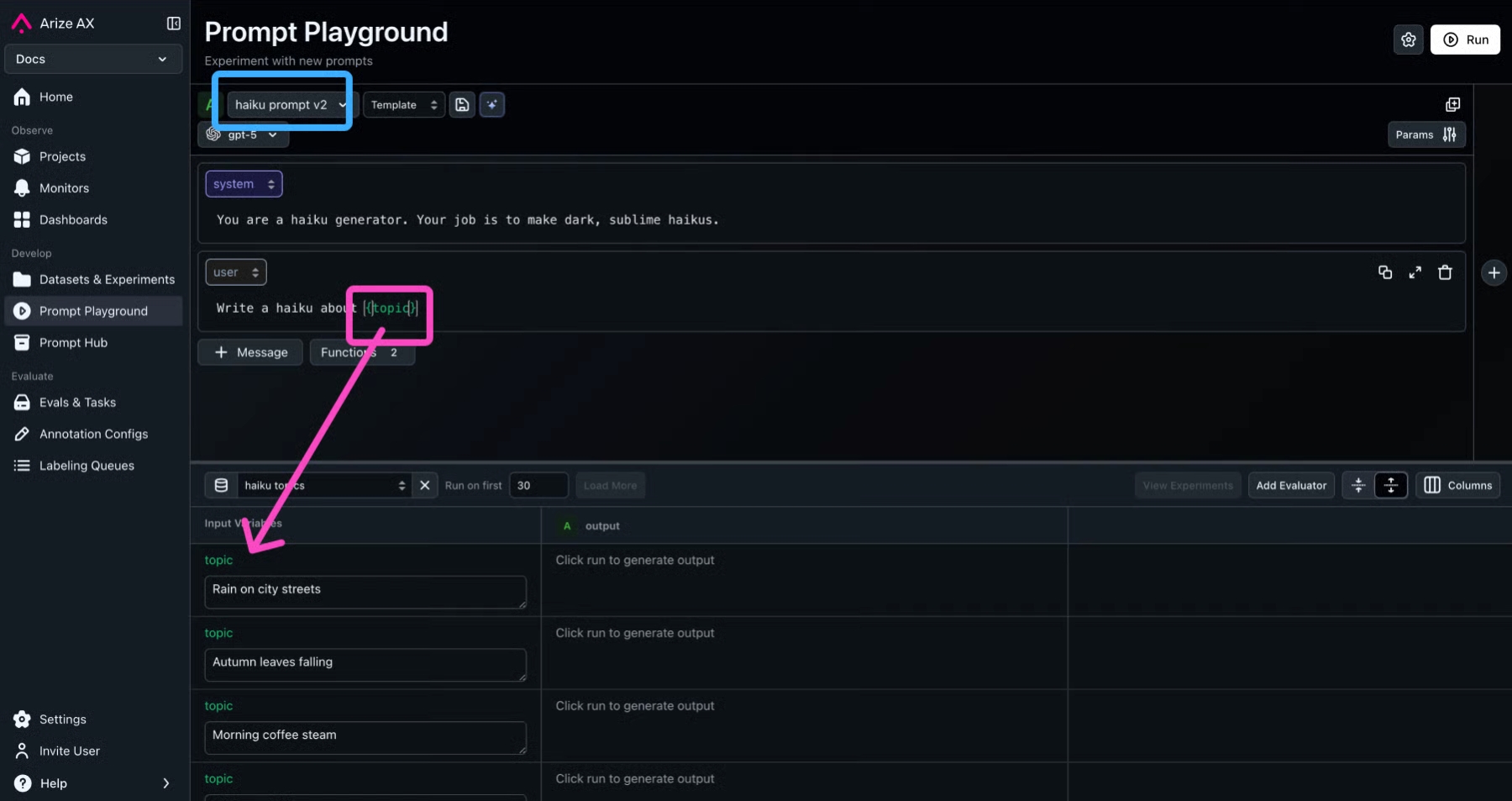
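If you build the dataset from a file, a short script can assemble the example rows for you. This is a minimal sketch that assumes a CSV upload and uses hypothetical column names (`question`, `expected_answer`). Check the Create a dataset guide for the file formats and column requirements Arize AX actually accepts.

```python
import csv
import io

# Hypothetical example rows. The column names are illustrative, not required by Arize AX;
# each column can later be referenced from the prompt as a variable.
EXAMPLES = [
    {"question": "What is the capital of France?", "expected_answer": "Paris"},
    {"question": "How many days are in a leap year?", "expected_answer": "366"},
]


def examples_to_csv(rows: list[dict[str, str]]) -> str:
    """Serialize example rows to CSV text, using the first row's keys as the header."""
    buffer = io.StringIO()
    writer = csv.DictWriter(buffer, fieldnames=list(rows[0].keys()))
    writer.writeheader()
    writer.writerows(rows)
    return buffer.getvalue()
```

Save the returned text to a `.csv` file (open it with `newline=""` so the csv module controls line endings), then upload that file.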

Go back to the prompt playground and choose your dataset from the Select a Dataset dropdown.

Step 2: Set your Prompt

Load your prompt from the Prompt Hub using the Select a template from prompt hub dropdown.

OR fill in a new prompt (see Create a Prompt).

Include variables from your dataset in the prompt by wrapping each variable name in curly braces.
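To see how a curly-brace variable is filled from one dataset row, here is a small sketch. It mirrors single-brace substitution only; the playground's own template engine may treat escaping and edge cases differently. `render_prompt` is a hypothetical helper.

```python
import re

# Matches {variable_name} placeholders whose names look like identifiers.
# Braces that do not wrap an identifier (e.g. in a JSON snippet) are left untouched.
PLACEHOLDER = re.compile(r"\{([A-Za-z_][A-Za-z0-9_]*)\}")


def render_prompt(template: str, row: dict[str, str]) -> str:
    """Replace each {column} placeholder with that column's value from one dataset row."""
    missing = [name for name in PLACEHOLDER.findall(template) if name not in row]
    if missing:
        raise KeyError(f"Template references columns not in the dataset: {missing}")
    return PLACEHOLDER.sub(lambda m: str(row[m.group(1)]), template)


template = "Answer concisely.\nQuestion: {question}"
row = {"question": "What is the capital of France?", "expected_answer": "Paris"}
print(render_prompt(template, row))
```

A regex is used instead of `str.format` so that literal braces elsewhere in the prompt do not raise errors, and missing columns are reported up front rather than surfacing as a broken prompt mid-run.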

Step 3: Add Evaluators

Select Add Evaluator to attach evaluators that judge the outputs this experiment generates.

Add a Code Eval

Write a programmatic evaluator if you want to judge your experiment outputs with code.

Learn more: Code Evals

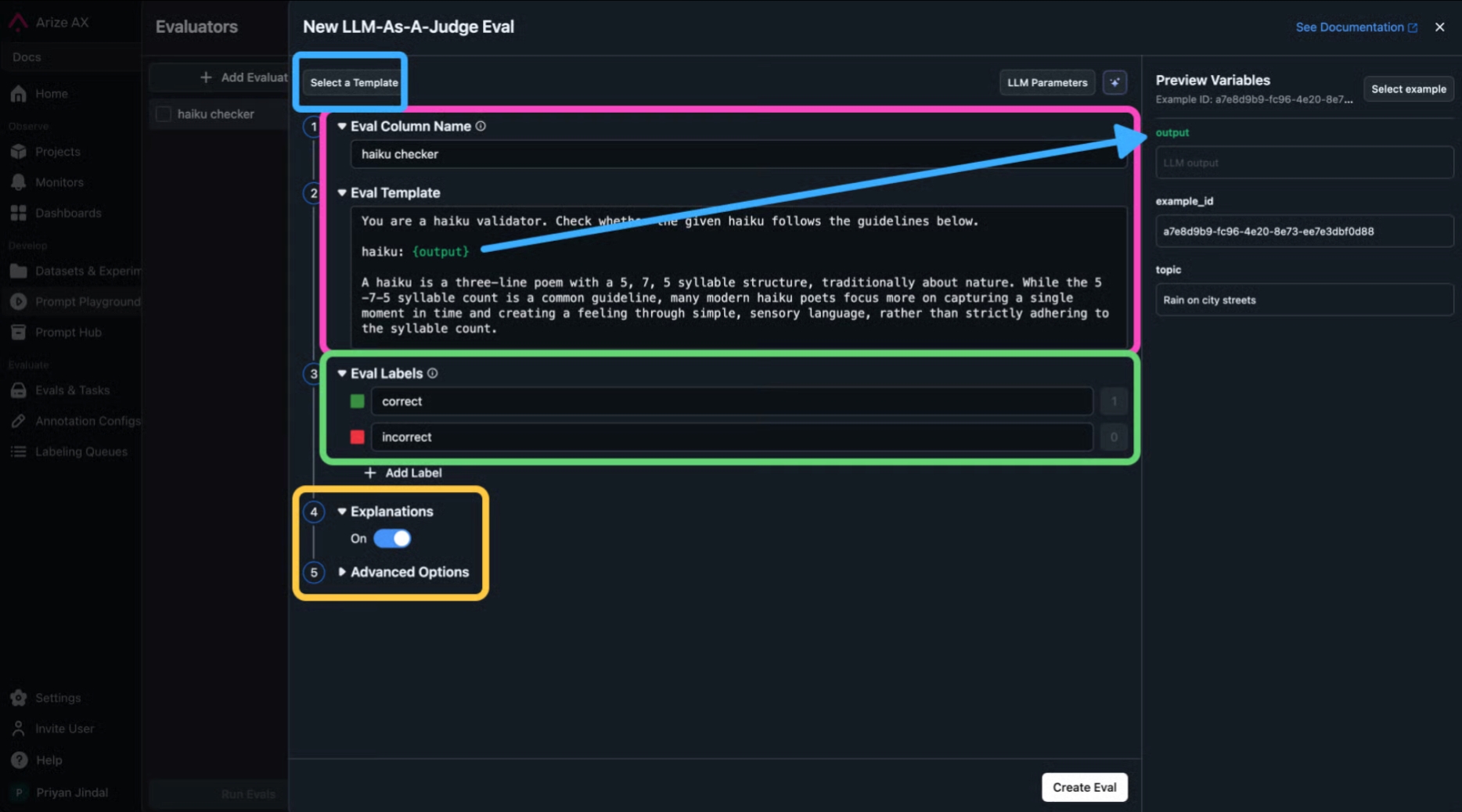
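As an illustration of the kind of logic a code eval can hold, the sketch below labels an output by checking whether the expected answer appears in it. The function name, the `EvalResult` shape, and the `correct`/`incorrect` labels are hypothetical; the interface Arize AX actually expects is described in Code Evals.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class EvalResult:
    """Hypothetical result shape: a label, a numeric score, and an optional explanation."""
    label: str
    score: float
    explanation: Optional[str] = None


def contains_answer_eval(output: str, expected_answer: str) -> EvalResult:
    """Pass when the expected answer appears in the output, ignoring case and surrounding whitespace."""
    passed = expected_answer.strip().lower() in output.lower()
    return EvalResult(
        label="correct" if passed else "incorrect",
        score=1.0 if passed else 0.0,
        explanation=None if passed else f"Expected {expected_answer!r} to appear in the output.",
    )
```

Substring matching is deliberately crude (for example, "366" also matches "1366"); a real evaluator might normalize numbers or use exact matching instead.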

Add an LLM as Judge Evaluator

Use an LLM to judge your experiment outputs. Learn more: LLM as a Judge

Select one of our Arize eval templates.

OR write your own. Make sure to embed variables from the dataset so that the evaluator has something to evaluate.

Set your eval labels. These are the labels the evaluator picks from when judging the output.

Turn explanations on or off. Explanations are short passages of reasoning that the LLM generates to justify the label it chose. You can also set advanced options.

Click Create Eval once you are done.
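The settings above (dataset variables in the judge template, a fixed set of labels, optional explanations) fit together roughly as in this sketch. The template text, the `EXPLANATION:`/`LABEL:` response format, and the helper names are all assumptions for illustration, not how Arize formats or parses judge responses.

```python
import re
from typing import List, Optional, Tuple

# Hypothetical judge template. {question} and {expected_answer} come from the dataset;
# {output} is the text produced by the prompt under test.
JUDGE_TEMPLATE = """You are grading an answer.
Question: {question}
Reference answer: {expected_answer}
Submitted answer: {output}

Respond in exactly this format:
EXPLANATION: <one or two sentences>
LABEL: <one of: {labels}>"""


def snap_to_label(text: str, labels: List[str]) -> Optional[str]:
    """Return the single allowed label found as a whole word in text, else None (assumes plain-word labels)."""
    lowered = text.strip().lower()
    found = [label for label in labels if re.search(rf"\b{re.escape(label.lower())}\b", lowered)]
    return found[0] if len(found) == 1 else None


def parse_judgement(response: str, labels: List[str]) -> Tuple[Optional[str], Optional[str]]:
    """Split a judge response into (label, explanation); unparseable parts come back as None."""
    label: Optional[str] = None
    explanation_lines: List[str] = []
    in_explanation = False
    for line in response.splitlines():
        stripped = line.strip()
        upper = stripped.upper()
        if upper.startswith("LABEL:"):
            label = snap_to_label(stripped[len("LABEL:"):], labels)
            in_explanation = False
        elif upper.startswith("EXPLANATION:"):
            explanation_lines = [stripped[len("EXPLANATION:"):].strip()]
            in_explanation = True
        elif in_explanation:
            # Continuation lines of a multi-line explanation.
            explanation_lines.append(stripped)
    explanation = " ".join(part for part in explanation_lines if part) or None
    return label, explanation


LABELS = ["correct", "incorrect"]
judge_prompt = JUDGE_TEMPLATE.format(
    question="What is the capital of France?",
    expected_answer="Paris",
    output="The capital of France is Paris.",
    labels=", ".join(LABELS),
)
```

Word-boundary matching keeps "correct" from matching inside "incorrect", and a response naming both labels is treated as unparseable rather than guessed. Asking for the explanation before the label is a common pattern, since the model states its reasoning before committing to a label.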

Step 4: Run Experiment

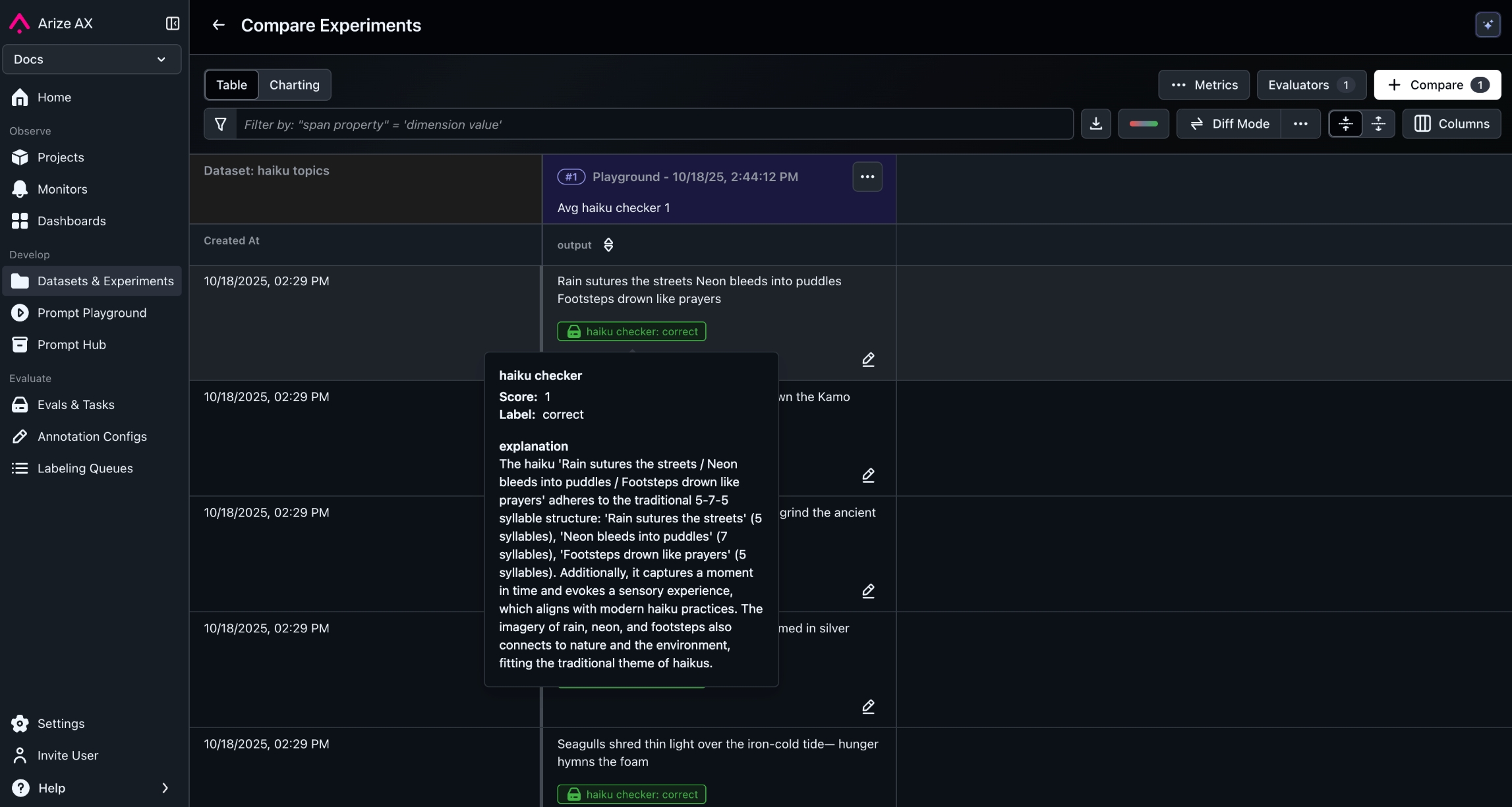

Hit Run to trigger the experiment run.
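Conceptually, a run loops over the dataset: it fills the prompt from each row, generates an output, and passes that output to every evaluator. The sketch below shows that flow with an offline stand-in for the model. All names here are hypothetical, and Arize AX executes runs on its side, possibly concurrently rather than in this simple sequential loop.

```python
from typing import Callable, Dict, List

Row = Dict[str, str]


def run_experiment(
    rows: List[Row],
    render: Callable[[Row], str],
    call_model: Callable[[str], str],
    evaluators: Dict[str, Callable[[Row, str], str]],
) -> List[Dict[str, str]]:
    """For each row: render the prompt, generate an output, then label it with every evaluator."""
    results: List[Dict[str, str]] = []
    for index, row in enumerate(rows):
        output = call_model(render(row))
        record = {"example_id": str(index), "output": output}
        for name, evaluate in evaluators.items():
            record[name] = evaluate(row, output)
        results.append(record)
    return results


def fake_model(prompt: str) -> str:
    """Offline stand-in for an LLM call so the sketch runs without credentials."""
    return "Paris" if "France" in prompt else "365"


def correctness(row: Row, output: str) -> str:
    """Hypothetical code eval: the expected answer must appear in the output."""
    return "correct" if row["expected_answer"] in output else "incorrect"


rows = [
    {"question": "What is the capital of France?", "expected_answer": "Paris"},
    {"question": "How many days are in a leap year?", "expected_answer": "366"},
]
results = run_experiment(
    rows,
    render=lambda row: f"Question: {row['question']}",
    call_model=fake_model,
    evaluators={"correctness": correctness},
)
```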

Hit View Experiment to get a detailed view of your experiment run.

Hover over the eval label to see the eval explanation.
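To check the goal stated at the start of this page (improving on hard examples without regressing on core use cases), it can help to compare per-example labels between a baseline run and a new run. This sketch assumes you already have each run's labels as a dictionary keyed by example ID, for example after exporting results. The export step, that dictionary shape, and the function names are assumptions.

```python
from typing import Dict, List


def compare_runs(
    baseline: Dict[str, str], candidate: Dict[str, str], passing_label: str = "correct"
) -> Dict[str, List[str]]:
    """List example IDs that flipped to passing (improved) or away from passing (regressed)."""
    shared = sorted(baseline.keys() & candidate.keys())
    improved = [ex for ex in shared if baseline[ex] != passing_label and candidate[ex] == passing_label]
    regressed = [ex for ex in shared if baseline[ex] == passing_label and candidate[ex] != passing_label]
    return {"improved": improved, "regressed": regressed}


def pass_rate(labels: Dict[str, str], passing_label: str = "correct") -> float:
    """Fraction of examples carrying the passing label (0.0 for an empty run)."""
    return sum(label == passing_label for label in labels.values()) / len(labels) if labels else 0.0
```

Two runs can have the same pass rate while different examples flip in each direction, which is why the per-example lists are worth checking alongside the aggregate.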
