OpenLLMetry

OpenLLMetry integration for sending observability data to Arize with OpenInference semantic conventions

Overview

OpenLLMetry is an open-source observability package for LLM applications that provides automatic instrumentation for popular LLM frameworks and providers. This integration enables you to send OpenLLMetry traces to Arize using OpenInference semantic conventions.

Integration Type

Tracing Integration

Key Features

Automatic instrumentation for 20+ LLM providers and frameworks

Seamless conversion to OpenInference semantic conventions

Real-time trace collection and analysis in Arize

Support for complex LLM workflows and chains
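To make the conversion step concrete, the sketch below renames a handful of span attributes from OpenLLMetry-style keys to OpenInference-style keys. It is an illustration only, not the code used by openinference-instrumentation-openllmetry: the attribute names in the mapping and the helper `to_openinference` are assumptions chosen for the example, and the real processor handles many more attributes.

```python
import re

# Assumed, partial mapping for illustration only; the actual processor
# covers many more attributes and may use different key names.
SIMPLE_RENAMES = {
    "gen_ai.request.model": "llm.model_name",
    "gen_ai.usage.prompt_tokens": "llm.token_count.prompt",
    "gen_ai.usage.completion_tokens": "llm.token_count.completion",
}

# Indexed message keys such as "gen_ai.prompt.0.content".
MESSAGE_KEY = re.compile(r"^gen_ai\.(prompt|completion)\.(\d+)\.(role|content)$")
MESSAGE_PREFIX = {
    "prompt": "llm.input_messages",
    "completion": "llm.output_messages",
}


def to_openinference(attributes: dict) -> dict:
    """Hypothetical helper: rename recognised keys, pass unknown keys through."""
    converted = {}
    for key, value in attributes.items():
        match = MESSAGE_KEY.match(key)
        if match:
            kind, index, field = match.groups()
            new_key = f"{MESSAGE_PREFIX[kind]}.{index}.message.{field}"
            converted[new_key] = value
        elif key in SIMPLE_RENAMES:
            converted[SIMPLE_RENAMES[key]] = value
        else:
            converted[key] = value
    return converted
```

In the actual integration this renaming happens inside a span processor, so application code does not need to call anything like this helper directly.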

Prerequisites

Arize account with Space ID and API Key

OpenLLMetry and OpenTelemetry packages

Target LLM provider credentials (e.g., OpenAI API key)
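Before running the quickstart, it can help to confirm that the credentials are actually set. The sketch below assumes the environment variable names used later on this page (`SPACE_ID`, `API_KEY`, `OPENAI_API_KEY`); the helper `missing_credentials` is a hypothetical name introduced for this example.

```python
import os

# Variable names follow the quickstart on this page; adjust them if your
# environment uses different names.
REQUIRED_VARS = ("SPACE_ID", "API_KEY", "OPENAI_API_KEY")


def missing_credentials(env=None) -> list:
    """Return the required variable names that are unset or empty."""
    env = os.environ if env is None else env
    return [name for name in REQUIRED_VARS if not env.get(name)]


# Example usage:
#   missing = missing_credentials()
#   if missing:
#       raise SystemExit(f"Missing environment variables: {', '.join(missing)}")
```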

Installation

pip install openinference-instrumentation-openllmetry

Quickstart

This quickstart shows you how to view your OpenLLMetry traces in Phoenix.

Install required packages.

pip install arize-otel openai opentelemetry-sdk opentelemetry-exporter-otlp opentelemetry-instrumentation-openai

Here's a simple example that demonstrates how to convert OpenLLMetry traces into OpenInference and view those traces in Phoenix:

import os

import grpc
import openai
from opentelemetry.sdk.trace.export import BatchSpanProcessor
from opentelemetry.exporter.otlp.proto.grpc.trace_exporter import OTLPSpanExporter
from arize.otel import register
from openinference.instrumentation.openllmetry import OpenInferenceSpanProcessor
from opentelemetry.instrumentation.openai import OpenAIInstrumentor

# Set your OpenAI API key
os.environ["OPENAI_API_KEY"] = "YOUR_OPENAI_API_KEY"

# Set up Arize credentials
SPACE_ID = os.getenv("SPACE_ID")
API_KEY = os.getenv("API_KEY")

tracer_provider = register(
    space_id=SPACE_ID,
    api_key=API_KEY,
    project_name="openllmetry-integration",
    set_global_tracer_provider=True,
)

# Convert OpenLLMetry span attributes to OpenInference
tracer_provider.add_span_processor(OpenInferenceSpanProcessor())

# Export the converted spans over OTLP/gRPC
tracer_provider.add_span_processor(
    BatchSpanProcessor(
        OTLPSpanExporter(
            # If using Phoenix Cloud, change this to your Phoenix Cloud endpoint
            # (Phoenix Cloud space -> Settings -> Endpoint/Hostname)
            endpoint="http://localhost:4317",
            headers={
                "authorization": f"Bearer {API_KEY}",
                "api_key": API_KEY,
                "arize-space-id": SPACE_ID,
                "arize-interface": "python",
                "user-agent": "arize-python",
            },
            compression=grpc.Compression.Gzip,  # use the enum instead of a string
        )
    )
)

# Instrument the OpenAI client with the OpenLLMetry instrumentor
OpenAIInstrumentor().instrument(tracer_provider=tracer_provider)

# Define and invoke your OpenAI model
client = openai.OpenAI()

messages = [
    {"role": "user", "content": "What is the national food of Yemen?"}
]

response = client.chat.completions.create(
    model="gpt-4",
    messages=messages,
)

# Now view your converted OpenLLMetry traces in Phoenix!

This example:

Uses the OpenLLMetry OpenAI instrumentor to instrument the application.

Defines a simple OpenAI chat completion request and runs a query.

Exports the resulting traces to Arize using a span processor.

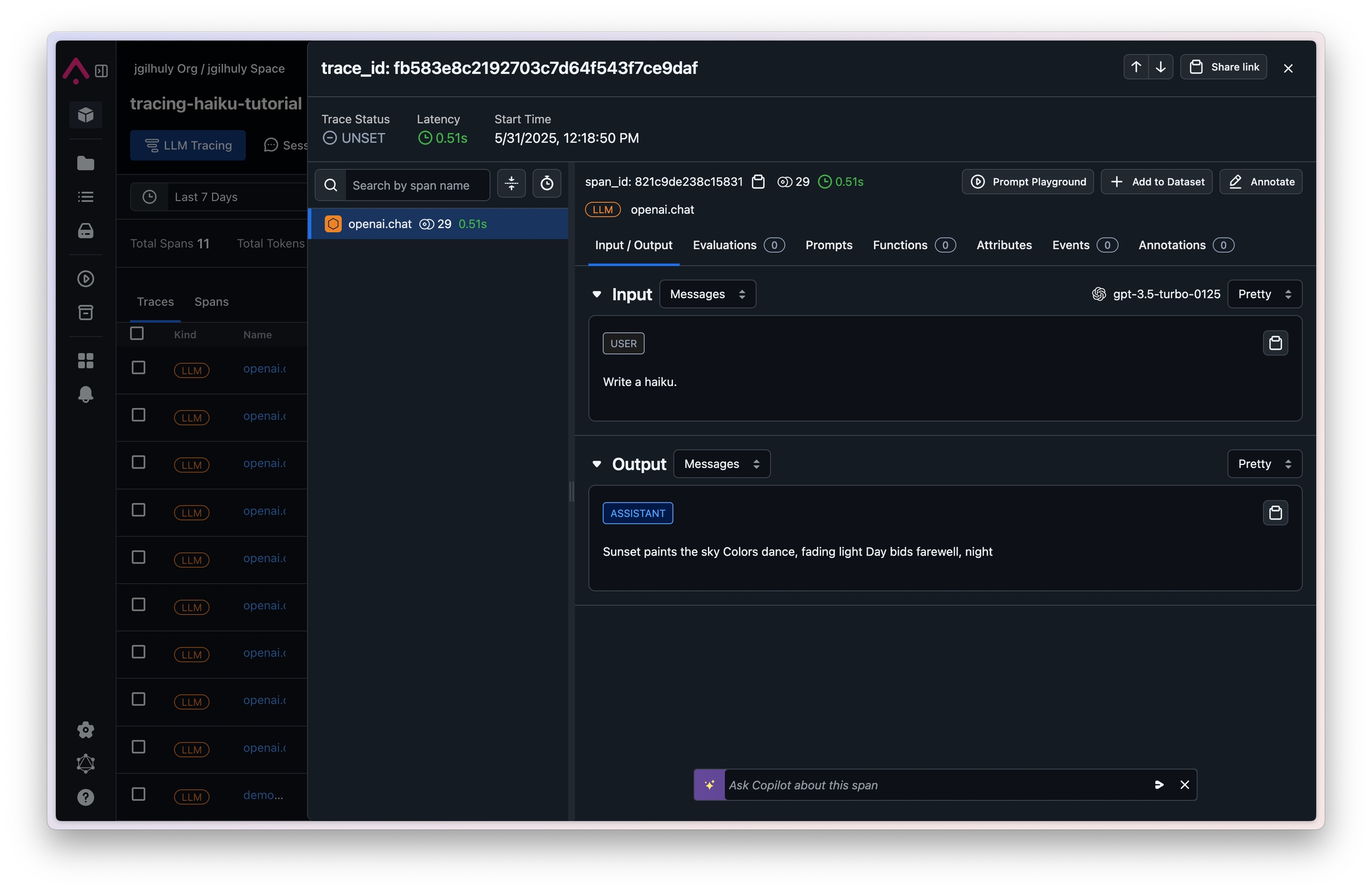

The traces will be visible in the Phoenix UI at http://localhost:6006.
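If no traces appear, one quick check is whether anything is listening on the ports used above (4317 for the OTLP gRPC endpoint, 6006 for the UI). The sketch below uses only the standard library; `is_listening` is a hypothetical helper name, and a successful TCP connection only shows that the port is open, not that the service behind it is healthy.

```python
import socket


def is_listening(host: str, port: int, timeout: float = 1.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers connection refused, timeouts, and unreachable hosts.
        return False


# Example usage with the ports from this page:
#   print("collector:", is_listening("localhost", 4317))
#   print("ui:", is_listening("localhost", 6006))
```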
