Traceloop SDK

Traceloop SDK integration for sending observability data to Arize with OpenInference semantic conventions

Overview

Traceloop SDK is a high-level observability SDK for LLM applications that provides automatic instrumentation with minimal setup. This integration lets you send Traceloop traces to Arize, with span attributes converted to OpenInference semantic conventions.

Integration Type

Tracing Integration

Key Features

One-line initialization with Traceloop.init()

Automatic instrumentation for 20+ LLM providers and frameworks

Seamless conversion to OpenInference semantic conventions

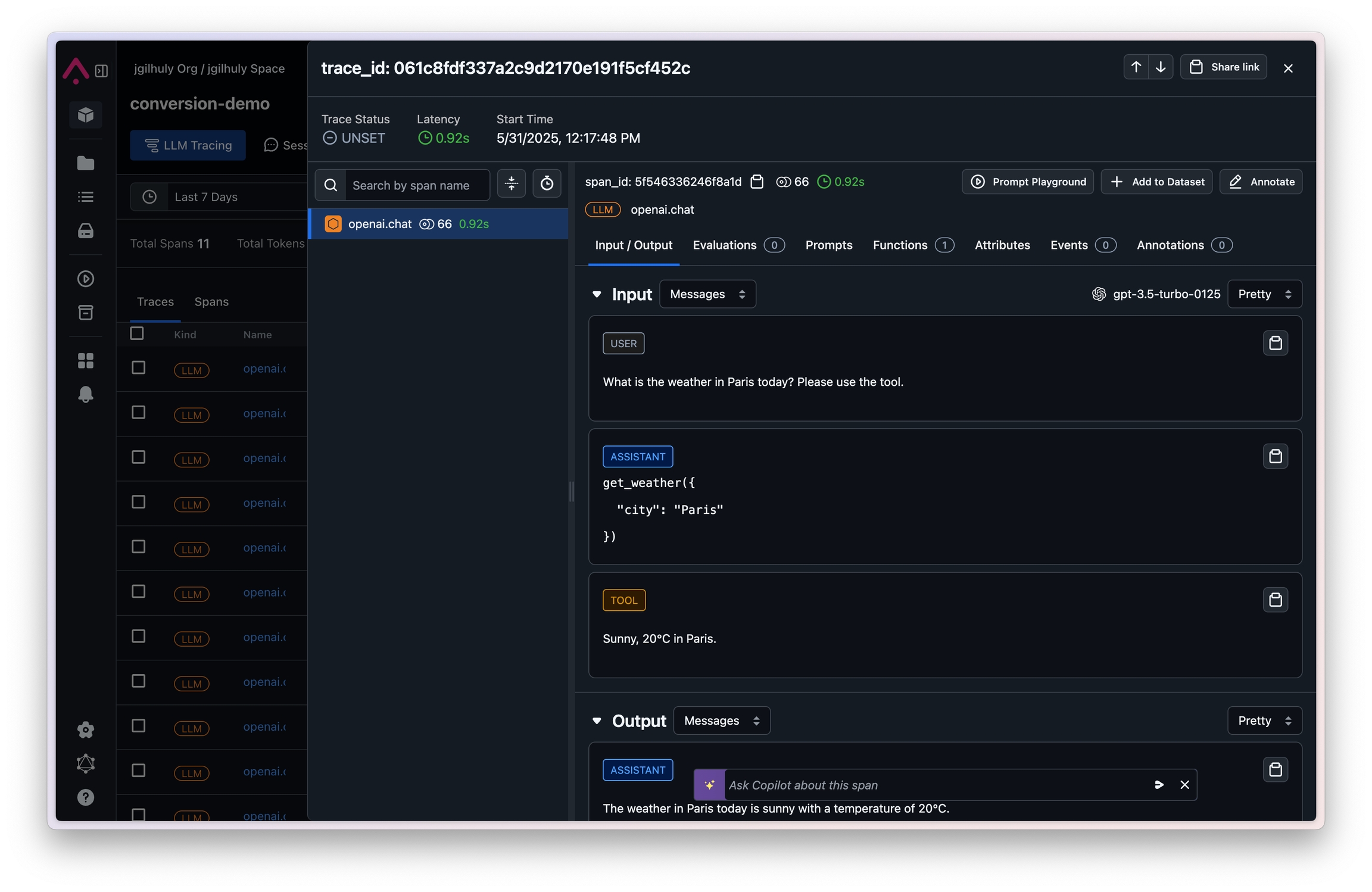

Real-time trace collection and analysis in Arize

Support for complex LLM workflows and function calling

Prerequisites

Arize account with Space ID and API Key

Python 3.8 or higher

Traceloop SDK and OpenTelemetry packages

Target LLM provider credentials (e.g., OpenAI API key)

Installation

Install the required packages:

For specific LLM providers, ensure you have their respective packages:
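After installing, a quick importability check can catch environment mix-ups (for example, packages installed into a different virtual environment). The sketch below uses only the standard library. The top-level module names (`traceloop`, `opentelemetry`, `openai`) are assumptions about what each package installs, and `missing_modules` is a hypothetical helper, not part of any SDK.

```python
import importlib.util

# Top-level module names; these are assumptions about what each package installs.
REQUIRED_MODULES = ["traceloop", "opentelemetry"]
PROVIDER_MODULES = ["openai"]  # swap in the provider you actually use


def missing_modules(names):
    """Return the module names from `names` that cannot be found for import."""
    missing = []
    for name in names:
        # find_spec returns None when a top-level module is not installed.
        if importlib.util.find_spec(name) is None:
            missing.append(name)
    return missing


missing = missing_modules(REQUIRED_MODULES + PROVIDER_MODULES)
if missing:
    print("Missing modules:", ", ".join(missing))
else:
    print("All required modules are importable.")
```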

Quick Start

Download the OpenInference Span Processor

Download the script that converts Traceloop spans to OpenInference format:

Mac/Linux:

Windows (PowerShell):

Basic Setup

The OpenLLMetryToOpenInferenceSpanProcessor is a custom span processor that maps Traceloop trace attributes to OpenInference semantic conventions.
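To make the idea of attribute mapping concrete, here is a minimal sketch of the kind of renaming such a processor performs. It is not the actual OpenLLMetryToOpenInferenceSpanProcessor. The Traceloop-side keys (`gen_ai.*`) are assumptions and may differ between Traceloop versions, and `to_openinference` is a hypothetical helper that operates on a plain dictionary rather than a real span.

```python
# Hypothetical mapping from Traceloop (OpenLLMetry) attribute keys to
# OpenInference keys. The source keys are assumptions; check them against
# the attributes your Traceloop version actually emits.
ATTRIBUTE_MAP = {
    "gen_ai.request.model": "llm.model_name",
    "gen_ai.system": "llm.provider",
    "gen_ai.usage.prompt_tokens": "llm.token_count.prompt",
    "gen_ai.usage.completion_tokens": "llm.token_count.completion",
}


def to_openinference(attributes):
    """Return a new dict with known keys renamed; unknown keys pass through."""
    converted = {}
    for key, value in attributes.items():
        converted[ATTRIBUTE_MAP.get(key, key)] = value
    # Tag the span as an LLM span only when a model name was found.
    if "llm.model_name" in converted:
        converted["openinference.span.kind"] = "LLM"
    return converted
```

For example, `to_openinference({"gen_ai.request.model": "gpt-4o"})` yields a dictionary containing `llm.model_name` and `openinference.span.kind`.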

Complete Example

Here's a complete working example with OpenAI function calling:

Configuration Options

Environment Variables

Set up your environment variables for seamless configuration:
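One way to consume such variables from Python is sketched below. The variable names (`ARIZE_SPACE_ID`, `ARIZE_API_KEY`, `ARIZE_OTLP_ENDPOINT`), the header keys (`space_id`, `api_key`), and the helper `load_arize_settings` are assumptions for illustration. Use the names your Arize setup actually expects.

```python
import os


def load_arize_settings(env=None):
    """Read Arize credentials from the environment and fail early if any are missing."""
    env = os.environ if env is None else env
    required = ("ARIZE_SPACE_ID", "ARIZE_API_KEY")  # assumed variable names
    missing = [name for name in required if not env.get(name)]
    if missing:
        raise RuntimeError("Missing environment variables: " + ", ".join(missing))
    return {
        # Default endpoint taken from the troubleshooting section of this page.
        "endpoint": env.get("ARIZE_OTLP_ENDPOINT", "otlp.arize.com:443"),
        # Header keys are an assumption about what the collector expects.
        "headers": {
            "space_id": env["ARIZE_SPACE_ID"],
            "api_key": env["ARIZE_API_KEY"],
        },
    }
```

Failing at startup with a clear message tends to be easier to debug than silently exporting spans with empty credentials.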

Supported LLM Providers

Traceloop SDK supports automatic instrumentation for:

LLM Providers: OpenAI, Anthropic, Azure OpenAI, Cohere, Replicate, Hugging Face, and more

Vector Databases: Pinecone, ChromaDB, Weaviate, Qdrant

Frameworks: LangChain, LlamaIndex, Haystack, CrewAI

Databases: Redis, SQL databases

For a complete list, see the Traceloop documentation.

OpenInference Semantic Conventions

When traces are processed through the OpenInference converter, the following attributes are standardized:

Input/Output Attributes

input.mime_type: Set to "application/json"

input.value: JSON string of prompt and parameters

output.value: LLM response content

output.mime_type: Response content type
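As an illustration of the input and output attributes, the sketch below builds them for a single chat request. The payload layout inside `input.value` and the `"text/plain"` output type are assumptions, and `io_attributes` is a hypothetical helper, not the converter's own code.

```python
import json


def io_attributes(prompt_messages, params, response_text):
    """Build OpenInference-style input/output attributes for one LLM call."""
    # Assumed payload shape: the messages plus any request parameters.
    payload = {"messages": prompt_messages, **params}
    return {
        "input.mime_type": "application/json",
        "input.value": json.dumps(payload),
        "output.value": response_text,
        # Assumption: a plain-text completion; structured output would differ.
        "output.mime_type": "text/plain",
    }
```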

LLM-Specific Attributes

llm.model_name: The model identifier

llm.provider: The LLM provider name

llm.token_count.prompt: Input token count

llm.token_count.completion: Output token count

openinference.span.kind: Set to "LLM"

Message Attributes

llm.input_messages: Array of input messages

llm.output_messages: Array of output messages

Message roles: system, user, assistant, function
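Span attributes are flat key-value pairs, so message arrays are typically stored under indexed keys. The sketch below shows one such flattening. The exact key layout (`<prefix>.<index>.message.role`) is an assumption based on the OpenInference conventions, and `flatten_messages` is a hypothetical helper.

```python
def flatten_messages(prefix, messages):
    """Flatten a list of chat messages into indexed span attribute keys."""
    flat = {}
    for i, message in enumerate(messages):
        base = f"{prefix}.{i}.message"
        flat[f"{base}.role"] = message["role"]
        # Content can be absent, e.g. for an assistant turn that only calls a tool.
        if message.get("content") is not None:
            flat[f"{base}.content"] = message["content"]
    return flat
```

Calling it with the prefix `llm.input_messages` and a system plus user message produces keys such as `llm.input_messages.0.message.role` and `llm.input_messages.1.message.content`.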

Function Call Attributes

llm.input_messages.*.tool_calls: Function call requests

llm.output_messages.*.tool_calls: Function call responses

Function schemas and execution results
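Tool calls can be flattened in the same indexed style. The sketch below is a hedged illustration. The key layout (`...message.tool_calls.<j>.tool_call.function.name`) is an assumption based on the OpenInference conventions, the input shape is simplified, and `flatten_tool_calls` is a hypothetical helper.

```python
import json


def flatten_tool_calls(prefix, message_index, tool_calls):
    """Flatten the tool calls of one message into indexed span attribute keys."""
    flat = {}
    for j, call in enumerate(tool_calls):
        base = f"{prefix}.{message_index}.message.tool_calls.{j}.tool_call.function"
        flat[f"{base}.name"] = call["name"]
        # Arguments are stored as a JSON string, since attribute values must be scalar.
        flat[f"{base}.arguments"] = json.dumps(call["arguments"])
    return flat
```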

Troubleshooting

Common Issues

Missing Traces

If traces aren't appearing in Arize:

Verify your Space ID and API Key are correct

Check network connectivity to otlp.arize.com:443

Ensure the OpenInference converter is properly configured

Verify Traceloop initialization completed successfully
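The connectivity check in the list above can be scripted with the standard library. This is a minimal sketch: `can_connect` is a hypothetical helper that only tests whether a TCP connection opens, so it does not validate TLS, credentials, or the OTLP protocol itself.

```python
import socket


def can_connect(host, port, timeout=5.0):
    """Return True if a TCP connection to host:port opens within `timeout` seconds."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        # Covers DNS failures, refused connections, and timeouts.
        return False


# Example (performs a real network call when uncommented):
# print(can_connect("otlp.arize.com", 443))
```

A `False` result points to DNS, firewall, or proxy issues rather than to the tracing configuration.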

Incorrect Span Format

If spans appear malformed:

Verify the OpenLLMetryToOpenInferenceSpanProcessor is added before Traceloop.init()

Check that all required OpenInference attributes are present

Validate the span processor order in your configuration

Function Calls Not Traced

If function calls aren't being traced:

Ensure you're using supported function calling patterns

Verify the tool definitions are properly formatted

Check that the model supports function calling
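For the tool-definition check, a small validator can flag common formatting mistakes before a request is sent. The sketch below assumes the OpenAI-style layout (`{"type": "function", "function": {...}}`) and checks only a few fields. `tool_definition_problems` is a hypothetical helper, not part of any SDK.

```python
def tool_definition_problems(tool):
    """Return a list of human-readable problems; an empty list means none were found."""
    problems = []
    if tool.get("type") != "function":
        problems.append('"type" should be "function"')
    function = tool.get("function")
    if not isinstance(function, dict):
        problems.append('"function" should be an object')
        return problems
    name = function.get("name")
    if not isinstance(name, str) or not name:
        problems.append('"function.name" should be a non-empty string')
    parameters = function.get("parameters")
    if parameters is not None:
        if not isinstance(parameters, dict) or parameters.get("type") != "object":
            problems.append('"function.parameters" should be a JSON Schema object')
        else:
            properties = parameters.get("properties", {})
            # Every required parameter must also be declared under "properties".
            for required in parameters.get("required", []):
                if required not in properties:
                    problems.append(f'required parameter "{required}" is not in "properties"')
    return problems
```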

Debug Mode

Enable debug logging to troubleshoot issues:
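One way to do this with Python's standard logging module is sketched below. The logger names `opentelemetry` and `traceloop` are assumptions about how those libraries name their loggers, and `enable_debug_logging` is a hypothetical helper. Adjust the names if your versions log under different ones.

```python
import logging


def enable_debug_logging(logger_names=("opentelemetry", "traceloop")):
    """Send DEBUG-level records from the given loggers to the root handler."""
    # basicConfig does nothing if the root logger already has handlers.
    logging.basicConfig(
        level=logging.DEBUG,
        format="%(asctime)s %(name)s %(levelname)s %(message)s",
    )
    for name in logger_names:
        # Set the level explicitly so these loggers emit DEBUG records
        # even when the root logger was configured earlier at a higher level.
        logging.getLogger(name).setLevel(logging.DEBUG)


enable_debug_logging()
```

Call this before initializing tracing so that setup messages are captured as well.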

