OTel Collector Deployment Patterns

Telemetry Routing via OpenTelemetry Collector

Overview and Motivation

This document outlines the proposed architecture for leveraging the open-source OpenTelemetry Collector as an intermediate telemetry processing and routing layer. This pattern facilitates the collection, processing, and distribution of telemetry data (logs, metrics, traces) from instrumented applications to various observability backends such as Arize.

In modern distributed systems, observability data must be efficiently collected and routed to different backend systems for analysis, alerting, and visualization. Direct instrumentation to each backend can lead to tightly coupled systems, increased maintenance complexity, and limited flexibility. Using an OpenTelemetry Collector provides a decoupled, scalable, and extensible approach to telemetry routing.

Architecture Components

1. Instrumented LLM Applications

LLM applications are instrumented either manually with the OpenTelemetry SDK or with an auto-instrumentor that uses OTel under the hood.

Applications export telemetry to a locally deployed or central OpenTelemetry Collector.

2. OpenTelemetry Collector

Acts as a gateway or agent.

Collects telemetry data from applications.

Applies optional processing and transformation.

Routes data to one or more configured backends.

3. Backends

Primary: Arize

Secondary (optional): Kafka, Prometheus, etc.

Deployment Models

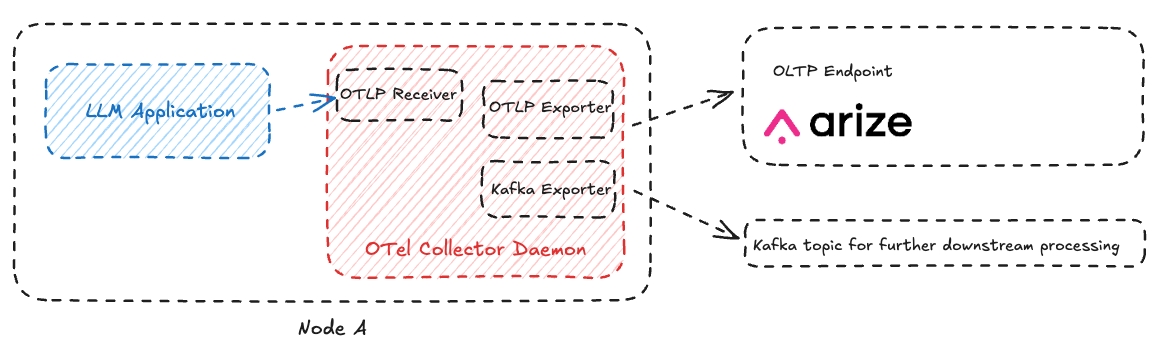

Agent Mode (not to be confused with LLM agents)

Deployment: A Collector instance runs alongside the application or on the same host (e.g., as a sidecar or DaemonSet).

Advantages (from OTel docs):

Simple to get started

Clear 1:1 mapping between application and collector

Considerations (from OTel docs):

Scalability (human and load-wise)

Not as flexible as other approaches

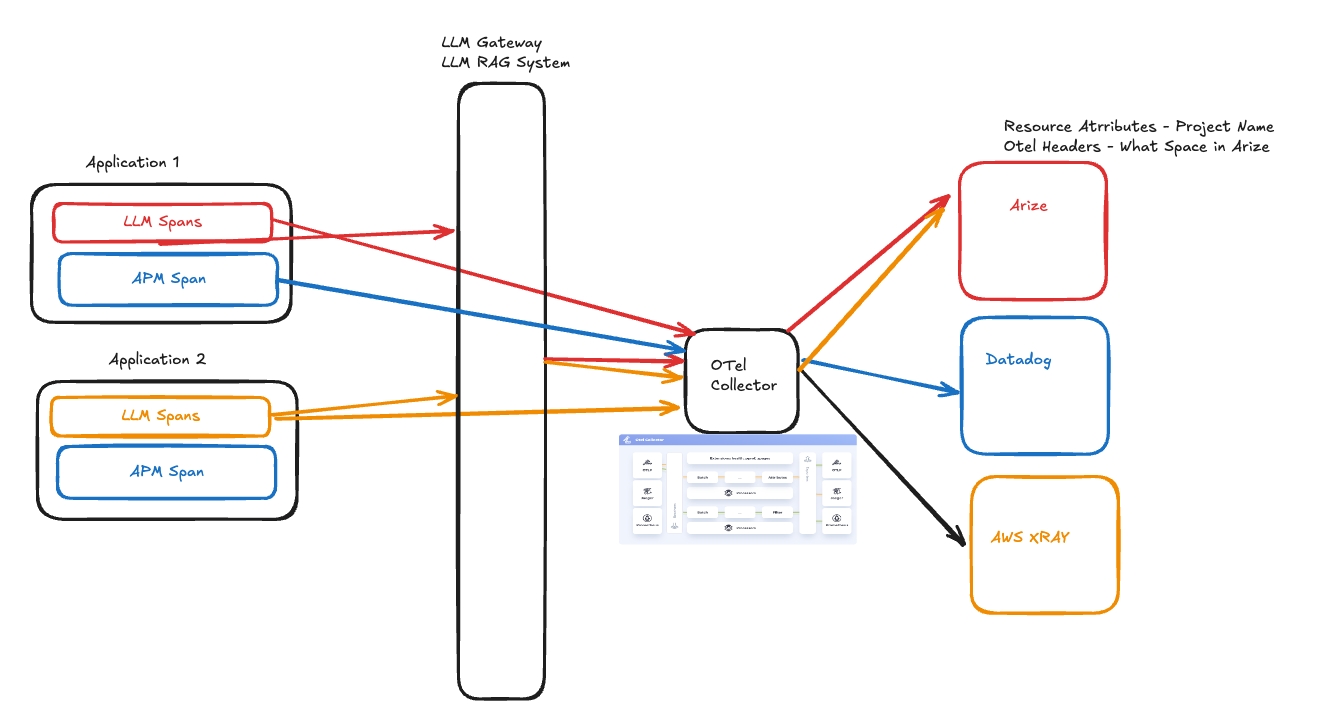

Gateway Mode

Deployment: A centralized or regionalized collector service receives telemetry from multiple agents or directly from applications.

Advantages (from OTel docs):

Separation of concerns such as centrally managed credentials

Centralized policy management (e.g., filtering or sampling spans)

Considerations (from OTel docs):

Increased complexity: an additional service to maintain, and one that can fail

Added latency in case of cascaded collectors

Higher overall resource usage (costs)

Hybrid Model

Deployment: Combines Agent and Gateway modes.

Advantages:

Distributed data collection and centralized processing.

Scales well in large environments.

Data Flow

The application emits telemetry data using one of the Arize auto-instrumentors or the OTel SDK.

Telemetry is sent to an OpenTelemetry Collector.

The Collector applies processors (e.g., filtering, batching, sampling).

The Collector exports the telemetry to configured backends like Arize.
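The four steps above can be pictured as a chain of functions. The sketch below is a toy model in plain Python, not the Collector's implementation: spans are simple dictionaries, and the names `insert_model_id`, `drop_health_checks`, and `run_pipeline` are hypothetical. It only illustrates that processors run in the order a pipeline lists them and that every configured exporter receives the processed data.

```python
from typing import Callable, Dict, List

Span = Dict[str, str]  # simplified stand-in for an OTel span
Processor = Callable[[List[Span]], List[Span]]
Exporter = Callable[[List[Span]], None]


def insert_model_id(spans: List[Span]) -> List[Span]:
    # Mimics an "insert" action: the key is added only when it is absent.
    return [{"model_id": "example-project", **span} for span in spans]


def drop_health_checks(spans: List[Span]) -> List[Span]:
    # Hypothetical filter: discard spans named "health-check".
    return [span for span in spans if span.get("name") != "health-check"]


def run_pipeline(
    spans: List[Span],
    processors: List[Processor],
    exporters: List[Exporter],
) -> List[Span]:
    for process in processors:  # step 3: processors run in listed order
        spans = process(spans)
    for export in exporters:  # step 4: each exporter gets the same data
        export(spans)
    return spans


# Two in-memory lists stand in for the backends.
arize_backend: List[Span] = []
kafka_backend: List[Span] = []

# Steps 1-2: telemetry emitted by the application arrives at the Collector.
received = [{"name": "llm-call"}, {"name": "health-check"}]

run_pipeline(
    received,
    processors=[drop_health_checks, insert_model_id],
    exporters=[arize_backend.extend, kafka_backend.extend],
)
print(arize_backend)
```

Reordering the `processors` list changes the result in the same way that reordering the `processors:` entry of a Collector pipeline does, which is why filtering is usually placed before more expensive steps.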

Example Configuration File

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

processors:
  batch:
    timeout: 5s
    send_batch_size: 2048
  resource/model_id:
    attributes:
      - action: insert
        key: model_id
        value: "ARIZE PROJECT ID GOES HERE"

exporters:
  otlp/arize:
    endpoint: "otlp.arize.com:443"
    headers:
      api_key: "API KEY REDACTED"
      space_id: "SPACE ID REDACTED"
    timeout: 20s
    retry_on_failure:
      enabled: true
      initial_interval: 1s
      max_interval: 10s
      max_elapsed_time: 0

service:
  telemetry:
    metrics:
      level: none
  pipelines:
    traces:
      receivers: [otlp]
      processors: [resource/model_id, batch]
      exporters: [otlp/arize]

Example Architecture

Benefits of This Design

✅ Decoupled Instrumentation

Applications do not need to manage backend-specific exporters or credentials, reducing code complexity.

✅ Multi-Destination Exporting

Collectors can fan out telemetry data to multiple destinations (e.g., Arize and Kafka).

✅ Consistent Observability Pipeline

Centralizes telemetry processing (e.g., sampling, filtering), ensuring consistent policies across all services.

✅ Scalability and Reliability

Collectors support load balancing and can be scaled horizontally. They also offer buffering and retries, increasing telemetry delivery reliability.

✅ Security and Compliance

Sensitive credentials (e.g., Arize API keys) are stored and managed centrally, not within application code.

✅ Extensibility

New exporters and processors can be added to the Collector without changing the application instrumentation.
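The retry behaviour mentioned under Scalability and Reliability is governed by an exporter's `retry_on_failure` settings. As a rough illustration, the sketch below computes the wait between attempts for `initial_interval: 1s` and `max_interval: 10s`. It assumes the interval grows by a factor of 1.5 per attempt and ignores random jitter; both are assumptions about the Collector's defaults, so check the exporter documentation for the version you run. `backoff_intervals` is a hypothetical helper, not part of any OTel library.

```python
from typing import List


def backoff_intervals(
    initial: float = 1.0,
    max_interval: float = 10.0,
    multiplier: float = 1.5,  # assumed growth factor, not taken from the config
    attempts: int = 8,
) -> List[float]:
    """Return the wait (in seconds) before each of the first `attempts` retries."""
    intervals = []
    current = initial
    for _ in range(attempts):
        # The wait never exceeds max_interval.
        intervals.append(min(current, max_interval))
        current = min(current * multiplier, max_interval)
    return intervals


print(backoff_intervals())
```

Under these assumptions the wait reaches the 10-second cap on the seventh retry and stays there. A `max_elapsed_time` of `0` is documented as disabling the overall retry deadline, so a long backend outage leads to retries at the capped interval rather than dropped data, at the cost of memory held in the sending queue.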

Advanced Collector Configuration Examples

Dynamic Trace Routing

A common requirement for teams using Arize is the ability to route traces to different projects dynamically from the same source application. When you set up tracing, the project ID, space ID, and API key are typically fixed and cannot be changed without shutting down the tracer provider and reinstantiating it with new credentials.

Our recommended approach for this use case is to deploy a custom OpenTelemetry Collector that handles dynamic routing based on span attributes.

How It Works

Application Setup: Your application sets specific span attributes (metadata.project_name, metadata.space_id) to indicate the target project

Collector Processing: The OpenTelemetry Collector receives traces and uses these attributes to determine routing

Dynamic Export: Based on the span attributes, traces are automatically routed to the appropriate Arize project

Configuration Example

Below is an advanced collector configuration that demonstrates dynamic routing to multiple Arize projects:

extensions:
  headers_setter/space1:
    headers:
      - key: space_id
        value: space_id_1
        action: upsert
      - key: api_key
        value: api_key_1
        action: upsert
  headers_setter/space2:
    headers:
      - key: space_id
        value: space_id_2
        action: upsert
      - key: api_key
        value: api_key_2
        action: upsert

processors:
  transform:
    error_mode: ignore
    trace_statements:
      - set(resource.attributes["openinference.project.name"], span.attributes["metadata.project_name"])
      - set(resource.attributes["space_id"], span.attributes["metadata.space_id"])

connectors:
  routing:
    default_pipelines: [traces/space1]
    table:
      - context: resource
        condition: resource.attributes["space_id"] == "space_id_1"
        pipelines: [traces/space1]
      - context: resource
        condition: resource.attributes["space_id"] == "space_id_2"
        pipelines: [traces/space2]

receivers:
  otlp:
    protocols:
      grpc:
        endpoint: 0.0.0.0:4317
      http:
        endpoint: 0.0.0.0:4318

exporters:
  otlp/space1:
    endpoint: "otlp.arize.com:443"
    auth:
      authenticator: headers_setter/space1
  otlp/space2:
    endpoint: "otlp.arize.com:443"
    auth:
      authenticator: headers_setter/space2

service:
  extensions: [headers_setter/space1, headers_setter/space2]
  pipelines:
    traces:
      receivers: [otlp]
      processors: [transform]
      exporters: [routing]
    traces/space1:
      receivers: [routing]
      exporters: [otlp/space1]
    traces/space2:
      receivers: [routing]
      exporters: [otlp/space2]

Configuration Breakdown

Extensions

Headers Setter: Manages authentication credentials for each Arize project. Each headers_setter extension is configured with the specific space_id and api_key for its target project.

Processors

Transform Processor: Extracts span attributes (metadata.project_name, metadata.space_id) and promotes them to resource attributes, making them available for routing decisions.

Connectors

Routing Connector: The core component that makes routing decisions based on resource attributes. It evaluates conditions and directs traces to the appropriate pipeline. The default_pipelines setting ensures traces without matching conditions are routed to a fallback destination.

Pipelines

The configuration uses a three-stage pipeline approach:

Main Pipeline: Receives traces and applies transformations

Routing Stage: Evaluates conditions and routes to project-specific pipelines

Export Pipelines: Handle final export to specific Arize projects
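To make the three stages concrete, here is a minimal Python model of the decision the Collector makes for each incoming resource. It is a sketch of the logic only, not Collector code: `promote` and `route` are hypothetical names, attributes are plain dictionaries, and the first matching rule wins. Whether the real routing connector stops at the first match can depend on the Collector version, but the conditions in this configuration are mutually exclusive, so the outcome is the same either way.

```python
from typing import Dict, List

# Mirrors the routing table and default_pipelines from the configuration.
ROUTING_TABLE = [
    ("space_id_1", ["traces/space1"]),
    ("space_id_2", ["traces/space2"]),
]
DEFAULT_PIPELINES = ["traces/space1"]


def promote(span_attrs: Dict[str, str], resource_attrs: Dict[str, str]) -> Dict[str, str]:
    """Main pipeline: copy the routing hints from the span onto the resource."""
    promoted = dict(resource_attrs)
    if "metadata.project_name" in span_attrs:
        promoted["openinference.project.name"] = span_attrs["metadata.project_name"]
    if "metadata.space_id" in span_attrs:
        promoted["space_id"] = span_attrs["metadata.space_id"]
    return promoted


def route(resource_attrs: Dict[str, str]) -> List[str]:
    """Routing stage: choose the export pipelines for a resource."""
    for space_id, pipelines in ROUTING_TABLE:
        if resource_attrs.get("space_id") == space_id:
            return pipelines
    # No rule matched, so fall back to the default pipelines.
    return DEFAULT_PIPELINES


tagged = promote({"metadata.project_name": "demo", "metadata.space_id": "space_id_2"}, {})
untagged = promote({}, {})

print(route(tagged))    # ['traces/space2']
print(route(untagged))  # ['traces/space1']
```

Because the routing hints are promoted to the resource level, this model assumes that all spans sharing a resource are meant for the same space.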

Application Requirements

For this configuration to work, your application must set the following span attributes:

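# Example: setting routing attributes in your application
from opentelemetry import trace

tracer = trace.get_tracer(__name__)
with tracer.start_as_current_span("llm-call") as span:  # placeholder span name
    span.set_attribute("metadata.project_name", "your-project-name")
    span.set_attribute("metadata.space_id", "space_id_1")  # or "space_id_2"

Deployment Considerations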

Sidecar Pattern: Deploy the collector as a sidecar container alongside your application

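Credential Security: Store API keys and space IDs as environment variables or secrets instead of hard-coding them in the collector configuration; the Collector can expand ${env:NAME} references in its config file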

Monitoring: Use the debug exporter to monitor routing decisions during development

Scaling: This configuration can be extended to support additional projects by adding more headers_setter extensions and routing rules, each with a matching exporter and export pipeline
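Adding a project touches four places in the configuration: an extension, an exporter, a routing rule, and an export pipeline. The sketch below generates those entries from a mapping of short names to space IDs, as one way to keep them consistent. `build_routing_fragment` and the `ARIZE_API_KEY_<NAME>` environment variable names are hypothetical; the receivers, the transform processor, and the main `traces` pipeline are unchanged and therefore not generated.

```python
import json
from typing import Dict


def build_routing_fragment(spaces: Dict[str, str]) -> dict:
    """Build the per-project config entries for {short_name: space_id}."""
    if not spaces:
        raise ValueError("at least one space is required")

    extensions, exporters, table, pipelines = {}, {}, [], {}
    for name, space_id in spaces.items():
        setter = f"headers_setter/{name}"
        exporter = f"otlp/{name}"
        pipeline = f"traces/{name}"

        extensions[setter] = {
            "headers": [
                {"key": "space_id", "value": space_id, "action": "upsert"},
                # Hypothetical env var name; keeps the key out of the file.
                {
                    "key": "api_key",
                    "value": "${env:ARIZE_API_KEY_" + name.upper() + "}",
                    "action": "upsert",
                },
            ]
        }
        exporters[exporter] = {
            "endpoint": "otlp.arize.com:443",
            "auth": {"authenticator": setter},
        }
        table.append(
            {
                "context": "resource",
                "condition": f'resource.attributes["space_id"] == "{space_id}"',
                "pipelines": [pipeline],
            }
        )
        pipelines[pipeline] = {"receivers": ["routing"], "exporters": [exporter]}

    # The first project doubles as the fallback, as in the example above.
    default = next(iter(pipelines))
    return {
        "extensions": extensions,
        "exporters": exporters,
        "connectors": {"routing": {"default_pipelines": [default], "table": table}},
        "service": {"extensions": list(extensions), "pipelines": pipelines},
    }


fragment = build_routing_fragment({"space1": "space_id_1", "space3": "space_id_3"})
print(json.dumps(fragment, indent=2))  # printed as JSON for inspection
```

The generated entries still need to be merged with the static parts of the configuration before the Collector can load them.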
